

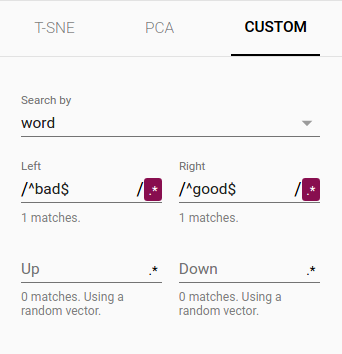

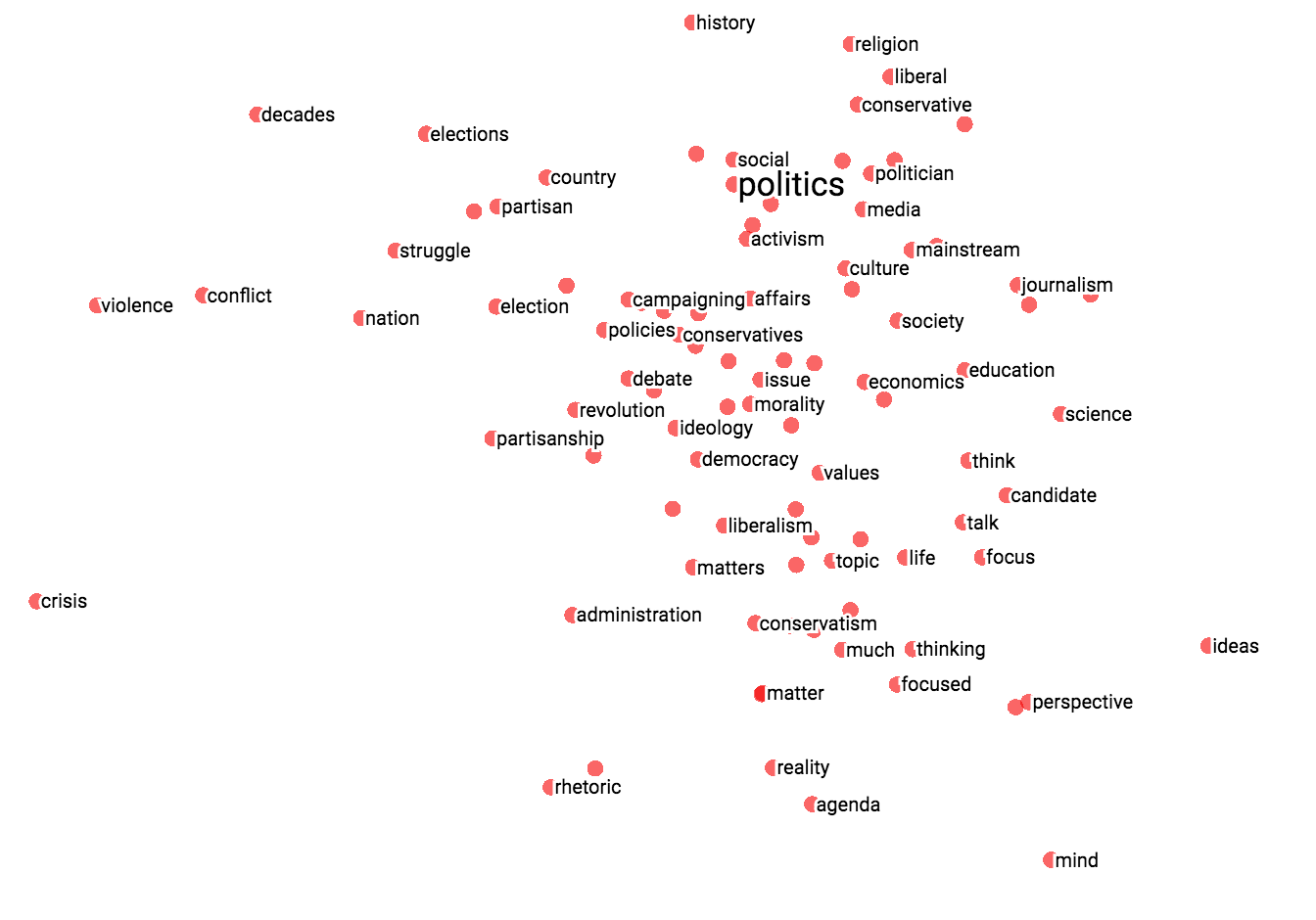

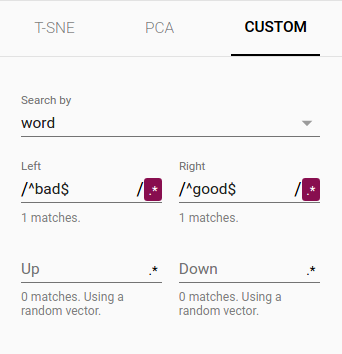

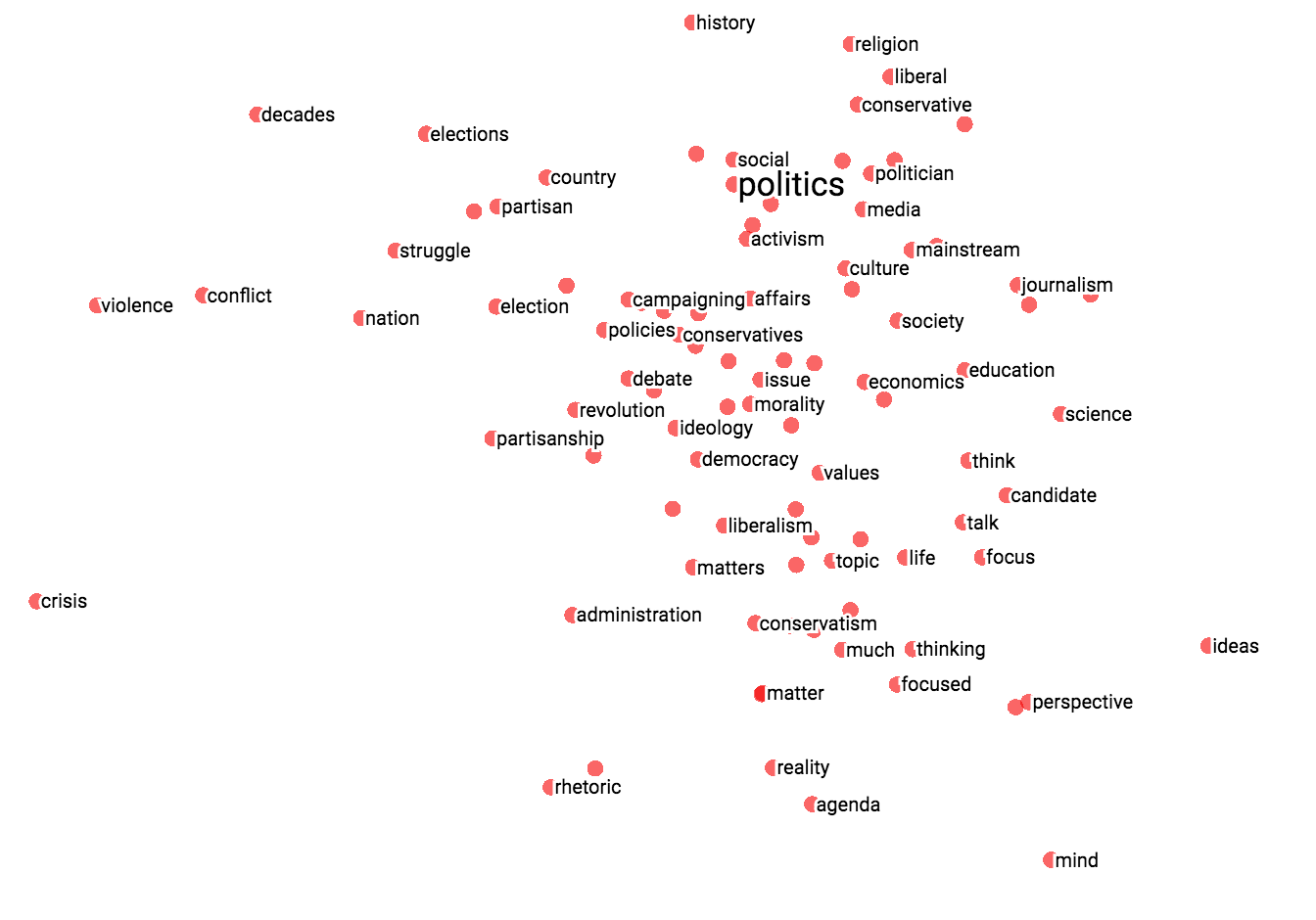

| Custom projection controls. | Custom projection of neighbors of "politics" onto "best" - "worst" vector. |
|---|---|

### Metadata

If you are working with an embedding, you'll probably want to attach labels/images to the data points. You can do this by generating a metadata file containing the labels for each point and clicking "Load data" in the data panel of the Embedding Projector.

The metadata can be either labels or images. In both cases it is stored in a file separate from the embedding itself. For labels, the format should be a [TSV file](https://en.wikipedia.org/wiki/Tab-separated_values) whose first line contains the column headers and whose subsequent lines contain the metadata values. In the example below, each tab character is written as `\t`:


Word\tFrequency

Airplane\t345

Car\t241

...
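A file in this format can be written with Python's standard-library `csv` module. This is a minimal sketch: the helper name `write_metadata_tsv` and the output file name `metadata.tsv` are illustrative choices, not names the Embedding Projector requires.

```python
import csv

def write_metadata_tsv(path, header, rows):
    """Write a tab-separated metadata file: one header line, then one line per vector."""
    with open(path, "w", newline="", encoding="utf-8") as f:
        # Tab delimiter and "\n" line endings give the layout shown above.
        writer = csv.writer(f, delimiter="\t", lineterminator="\n")
        writer.writerow(header)
        writer.writerows(rows)

# Hypothetical data mirroring the example above; rows must follow the
# same order as the vectors in the embedding variable.
write_metadata_tsv("metadata.tsv", ["Word", "Frequency"],
                   [["Airplane", 345], ["Car", 241]])
```

Non-string values such as `345` are converted with `str()` by the writer, so the file contains `Airplane\t345` exactly as in the example.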

The order of lines in the metadata file is assumed to match the order of vectors in the embedding variable, except for the header. Consequently, the (i+1)-th line in the metadata file corresponds to the i-th row of the embedding variable (counting both from 1). If the TSV metadata file has only a single column, then we don't expect a header row and assume each line is the label of the corresponding embedding row. We include this exception because it matches the commonly used "vocab file" format.
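To make the line-to-row rule and the single-column exception concrete, here is a small illustrative reader in Python. It is a sketch, not the projector's own parser; `load_metadata` is a hypothetical helper, and deciding "single column" by looking at the first line is an assumption.

```python
def load_metadata(lines):
    """Parse metadata lines into (header, rows) following the rules above.

    Illustrative only. Assumes a multi-column file starts with a header line,
    while a single-column file has no header and each line is one label.
    """
    rows = [line.rstrip("\r\n").split("\t") for line in lines]
    if rows and len(rows[0]) > 1:
        # Header present: the entry at index i of rows[1:] describes embedding row i.
        return rows[0], rows[1:]
    # Single-column "vocab file": line i is the label for embedding row i.
    return None, [row[0] for row in rows]

header, rows = load_metadata(["Word\tFrequency\n", "Airplane\t345\n", "Car\t241\n"])
# header == ["Word", "Frequency"]; rows[1] == ["Car", "241"] describes embedding row 1
```

Skipping the header is what shifts every label down by one line, which is why line i+1 maps to row i in the multi-column case but line i maps to row i for a vocab file.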

To use images as metadata, you must produce a single [sprite image](https://www.google.com/webhp#q=what+is+a+sprite+image) consisting of small thumbnails, one for each vector in the embedding. The sprite should store thumbnails in row-major order: the first data point goes in the top left and the last data point in the bottom right. The last row doesn't have to be filled, as shown below.

| 0 | 1 | 2 |
|---|---|---|
| 3 | 4 | 5 |
| 6 | 7 |   |
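The row-major layout can be computed mechanically. The following is a minimal Python sketch, assuming equally sized thumbnails and a square grid with ceil(√n) cells per side. That is a common convention and it reproduces the 3-column grid for 8 points shown above. `sprite_layout` is a hypothetical helper, and pasting the actual pixels (for example with an imaging library) is left out.

```python
import math

def sprite_layout(num_points, thumb_width, thumb_height):
    """Return the sprite size and each thumbnail's top-left (x, y) pixel offset.

    Assumes a square grid of ceil(sqrt(num_points)) cells per side, filled in
    row-major order; trailing cells after the last data point stay empty.
    """
    if num_points <= 0:
        raise ValueError("num_points must be positive")
    # Integer ceil(sqrt(n)) avoids floating-point rounding for large n.
    side = math.isqrt(num_points)
    if side * side < num_points:
        side += 1
    offsets = [
        ((i % side) * thumb_width, (i // side) * thumb_height)  # (column, row) in pixels
        for i in range(num_points)
    ]
    return (side * thumb_width, side * thumb_height), offsets

size, offsets = sprite_layout(8, 28, 28)
print(size)        # (84, 84)
print(offsets[7])  # (28, 56): second cell of the third row
```

Each offset is where thumbnail i would be pasted into a blank image of the returned size, so data point 7 lands in the partially filled last row, matching the grid above.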